From control to orchestration: leading Product in the age of AI

A field report on what it really takes to transform mindsets, rituals and systems when AI joins the team

Three months ago, a growth-stage tech company brought me in to coach their product team through a challenge they could feel, but couldn’t quite name.

They had artificial intelligence models in production. Product managers were experimenting with GPT-based tools. Executives were nodding along to plans filled with automation ambitions.

But something was off.

Despite the tooling, progress was stalling. Teams didn’t trust the models. Stakeholders were confused. And product leadership still operated like it was 2015: weekly one-to-ones, detailed requirement documents, quarterly planning and output-driven OKRs.

They didn’t need better tools. They needed a different operating system.

What this is and what it is not

This wasn’t a full-scale transformation programme. We didn’t redesign the structure or launch a year-long change initiative. This was a focused 90-day intervention: targeted coaching, deliberate experiments, hands-on reworking of product rituals and decision-making.

We didn’t fix everything. What we did was open things up. We exposed friction, made blind spots visible and introduced new rhythms.

The real change (cultural, behavioural and systemic) takes 6 to 12 months of discipline. But in 90 days, we built the momentum and the belief that it could be done.

The before state: AI without alignment

Here’s what we found:

AI models were generating specs, summaries and analysis, but humans didn’t trust them

Planning remained linear and time-based rather than outcome- or risk-driven

OKRs tracked delivery, not the performance or integrity of the AI

PRDs were bloated with information the AI already handled, yet lacked real human context

Most critically, the product managers had lost clarity on their purpose. If AI was doing the heavy lifting, what exactly were they supposed to be doing?

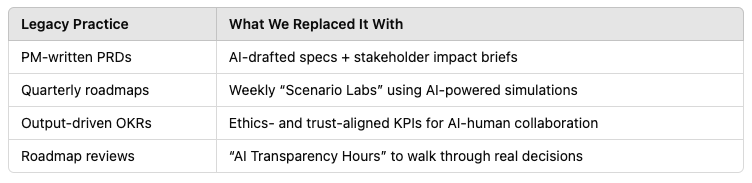

Phase one: retire rituals that no longer serve

We began by dismantling legacy processes that no longer aligned with reality.

None of this was theoretical. We implemented it within real product initiatives, in live delivery contexts, with legal, operations and engineering all at the table.

Phase two: equip the team with practical tools

We introduced three specific tools that unlocked real movement:

Trust dashboards

A single view showing:

AI decision accuracy

Frequency of human overrides

Indicators of customer impact

One team used this to identify and fix a model that was misclassifying Spanish-speaking customers, well before it escalated.
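To make the dashboard idea concrete, here is a minimal sketch of how the three figures could be computed per customer segment from a decision log. It is an illustration, not the team's actual implementation: the `Decision` record, its fields and the `trust_summary` function are all hypothetical names, the complaint flag is a crude stand-in for "customer impact", and the sample data is made up.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Decision:
    """One logged AI decision (hypothetical schema, for illustration)."""
    segment: str       # assumed grouping, e.g. customer language
    ai_correct: bool   # did the AI decision match the reviewed outcome?
    overridden: bool   # did a human override the AI decision?
    complaint: bool    # crude stand-in for "customer impact"


def trust_summary(decisions):
    """Return accuracy, override rate and complaint rate per segment."""
    grouped = defaultdict(list)
    for d in decisions:
        grouped[d.segment].append(d)

    summary = {}
    for segment, rows in grouped.items():
        n = len(rows)
        summary[segment] = {
            "decisions": n,
            "accuracy": sum(r.ai_correct for r in rows) / n,
            "override_rate": sum(r.overridden for r in rows) / n,
            "complaint_rate": sum(r.complaint for r in rows) / n,
        }
    return summary


# Made-up sample data, for illustration only.
sample = [
    Decision("en", True, False, False),
    Decision("en", True, False, False),
    Decision("en", False, True, False),
    Decision("en", True, False, False),
    Decision("es", False, True, True),
    Decision("es", True, False, False),
]

for segment, stats in trust_summary(sample).items():
    print(segment, stats)
```

Splitting the figures by segment is the design choice that matters here. A model can look healthy in aggregate while performing badly for one group, and a per-segment view is one way such a gap might surface.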

Scenario sessions

Rather than fixed plans, we hosted weekly simulation-based discussions:

What happens if this model fails silently?

What are the unintended consequences of faster delivery?

Where are we trading customer trust for internal efficiency?

These sessions brought strategy back into the room.

Narrative briefs

We replaced long-form specifications with focused decision briefs, answering:

Where does this impact trust?

What are the known failure risks?

Where must humans remain actively involved?

Legal, engineering and commercial teams were finally aligned on intent, not just scope.

What changed after 90 days

This was not a transformation. But the signs of change were clear:

Product managers shifted from producing documents to interpreting AI and stress-testing trade-offs

Trust dashboards became a shared tool across functions

One team identified a biased routing model before launch, thanks to a simple override alert

Stakeholders began asking about decision boundaries, not just delivery dates

We did not finish the work: we started it, properly.
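An override alert like the one mentioned above does not need to be sophisticated. The sketch below is one possible shape, under assumptions of our own: it watches the most recent decisions and fires when the share of human overrides passes a threshold. The class name `OverrideAlert`, the window size and the threshold are hypothetical and would need tuning against real traffic.

```python
from collections import deque


class OverrideAlert:
    """Fire when the override rate over the last `window` decisions exceeds `threshold`."""

    def __init__(self, window=50, threshold=0.2):
        self.threshold = threshold
        # deque with maxlen drops the oldest entry automatically.
        self.recent = deque(maxlen=window)

    def record(self, overridden):
        """Record one decision; return True if the alert should fire."""
        self.recent.append(bool(overridden))
        if len(self.recent) < self.recent.maxlen:
            return False  # window not full yet, too little data to judge
        rate = sum(self.recent) / len(self.recent)
        return rate > self.threshold


# Illustrative stream: True means a human overrode the AI decision.
alert = OverrideAlert(window=5, threshold=0.4)
stream = [False, False, True, False, True, True, True]
fired = [alert.record(o) for o in stream]
print(fired)
```

In this example the alert stays silent until the window is full, then fires once more than 40% of the last five decisions were overridden. A rising override rate does not say what is wrong with a model. It only says that humans are disagreeing with it often enough to warrant a conversation.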

Technical feasibility: what you need to make this work

This is not speculative. Most of what we did is entirely feasible today if you have the right foundations.

Ready now:

AI-generated specifications: via internal GPT assistants or tools like Notion or Coda

Override and audit logs: built from basic observability and decision tracking

Scenario analysis: using GPT-4 or similar for structured prediction work

Trust dashboards: simple if models are instrumented and monitored

Prerequisites:

Clean data pipelines

Cross-functional collaboration (especially with data and infra)

Clear boundaries between automation and judgment

Leadership backing for trust over throughput

If your foundations are not in place, focus there first. Don’t layer AI on top of dysfunction.

Mindset and organisational design: the harder part

Technology is straightforward. People are not.

The PM identity gap

Most product managers were trained to define requirements and manage delivery. When AI automates both, many default to either policing or passivity.

They need to become sensemakers – able to interpret, challenge and explain AI in business terms.

The executive illusion of control

Most leaders still expect predictability. But AI introduces variability. Outcomes are no longer linear and accountability is shared.

Leaders need to move from control to orchestration and stop asking for plans that ignore uncertainty.

The missing system design

Most companies treat AI as a bolt-on. It needs to be treated as a system: with feedback loops, human checkpoints and clear failure protocols.
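As a rough illustration of what "human checkpoints and clear failure protocols" can mean in practice, the sketch below routes each model output to one of three paths: applied automatically, sent to a human reviewer, or handled by a safe fallback. Everything in it is an assumption made for the example, including the `ModelOutput` shape, the `route_decision` function and the 0.8 review threshold. The `record_feedback` helper stands in for the feedback loop by keeping the human's verdict next to the model's.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Route(Enum):
    AUTO = "auto"              # AI decision applied directly
    HUMAN_REVIEW = "review"    # human checkpoint
    FALLBACK = "fallback"      # failure protocol: use a safe default


@dataclass
class ModelOutput:
    """Hypothetical model result: a label plus a confidence in [0, 1]."""
    label: Optional[str]
    confidence: float


def route_decision(output, review_threshold=0.8):
    """Decide which path a model output should take."""
    # Failure protocol: missing or malformed output never reaches a customer.
    if output is None or output.label is None:
        return Route.FALLBACK
    if not 0.0 <= output.confidence <= 1.0:
        return Route.FALLBACK
    # Human checkpoint: low-confidence decisions go to a person.
    if output.confidence < review_threshold:
        return Route.HUMAN_REVIEW
    return Route.AUTO


def record_feedback(log, output, human_label):
    """Feedback loop: store the reviewer's verdict alongside the model's."""
    log.append({
        "model_label": output.label,
        "human_label": human_label,
        "agreed": output.label == human_label,
    })


feedback_log = []
uncertain = ModelOutput("approve", 0.55)
if route_decision(uncertain) is Route.HUMAN_REVIEW:
    record_feedback(feedback_log, uncertain, human_label="reject")

print(route_decision(ModelOutput("approve", 0.95)))
print(feedback_log)
```

The threshold is a product decision as much as a technical one. Where the boundary sits between automation and judgment is exactly the kind of question that belongs in front of stakeholders, not buried in configuration.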

Without this, AI becomes a black box and product loses its credibility.

If you're about to start

If you're a CPO, Head of Product or transformation lead, here's what we recommend:

1. Audit your rituals

Which meetings, documents or reviews still assume that humans own all execution? Change them.

2. Clarify the human role

Where do humans matter most?

Interpreting decisions

Overriding for ethical reasons

Translating outcomes to stakeholders

Make these roles explicit and respected.

3. Instrument trust early

Track not just performance, but:

Where humans intervene

How decisions are explained

How customers respond

Build a culture of human oversight, not just efficiency.

4. Train for ambiguity, not velocity

Invest in capability-building for:

Scenario thinking

Ethical framing

Stakeholder storytelling

Your best product managers will be the ones who can live in the grey, not the ones who deliver fastest.

A weekly habit that changed everything

Each week, we closed with a reflection ritual:

One decision the AI got right

One judgment where a human improved the outcome

One moment of uncertainty worth discussing

It started out awkward. By week six, it was a highlight. By week nine, it was embedded.

This is leadership now: not knowing all the answers but making space for better questions.

The road ahead

The company we worked with is still early in the journey. The bigger impact, on customer outcomes, innovation velocity and systemic trust, will emerge over the next 6 to 12 months.

But one thing is already clear.

The organisations that succeed with AI will not be the ones with the best models. They will be the ones with the best human leaders: people able to hold ambiguity, build trust and design for judgment.

If your product team is still chasing output while AI makes the real decisions, it's time to rewire. And that won't start with a tool. It will start with how you lead.